Artificial intelligence is the opposite of education

Or: what if there is no middle ground?

It’s been a while, and I’m sorry, but like everyone else I’m tired of AI. Dealing with the small local fires is bad enough without the horizon being constantly shrouded in smoke. It’s not as though there aren’t other crises. Austerity, redundancies, ideological attacks on forms of scholarship that don’t contribute directly to military or industrial advantage, ‘crackdowns’ on student visas and student protests (and if universities can ignore the warmongering and human atrocities, they still have to deal with the protests). Then there’s the collapse of democratic norms and public discourse, the very things that education is supposed to secure. Can’t we just agree some accommodation with AI and focus on these other, more existential issues?

Unfortunately these other issues are - to quote a recent keynote from Jennifer Sano-Franchini – ‘interconnected and co-constituted’ with AI. Where the AI industry is not actively contributing to them it is drawing energy from these other crises. Sometimes, as with Trump’s latest Bill, it is doing both at once.

So while I am tired of AI, I am even more tired of the cope. From ‘this is the worst AI you will ever use’ (readers, I did not agree) we are now deep into ‘this is the best it gets, deal with it’. Feel the mid and do it anyway. Meta’s Llama has hit a roadblock, GPT-4.5 is underwhelming and Gemini 2.5 faces a backlash from angry developers. Inaccuracies and fabrications (classified in this article as ‘botshit’) are actually getting worse, perhaps due to a training diet of AI-generated slop and synthetic data. Even the true believers admit that AI is running out of data and scale won’t improve things anyway. This last link, from AI fanatic Toby Ord, really does say the quiet part out loud.

Let me translate:

Since 2020 it has been known by the industry that scaling up AI would make catastrophically increasing demands on power and compute, while returns on model accuracy would quickly tail away. But the industry is betting that the financial returns will continue anyway, because everyone will be locked in.

Yet every time the mask slips on the ugliness, greed and rapacity of the AI industry, whenever the lies and misdirections and fabrications become unsustainable, come the grown-ups to tell us to calm down and carry on. Because polarisation is unhelpful. No matter that on one side are the four or five largest corporations that have ever existed, the biggest bubble of financial over-investment, the most powerful military and surveillance states and all the combined forces of tech hype and mainstream media, while on the other side are thoughtful people with arguments. The good academic must always plot a middle course between naked power and poor thought.

But what if there isn’t a middle of this road? What if the project of ‘artificial intelligence’ is not a road to new kinds of education – not even a slow and bumpy one – but the reversal of everything education stands for? What if, at least in its current, (de)generative, hyper-capitalistic guise, the project of AI is actively inimical to the values of learning, teaching and scholarship, as well as to human flourishing on a finite planet? In my next few posts I consider the evidence.

The links will go live as I add them. They are, as always, imperfect thoughts. I wait for counter arguments. Please also let me know if there are other propositions you’d like me to include.

Thoughts so far:

(Not) learning

(Not) teaching (either)

(Not) caring for a learning community

(Not) education as a right

(Not) education as a democratic practice

(Not) thinking

(Not) telling the truth

Generative AI doesn’t tell the truth. According to the Association for the Advancement of Artificial Intelligence, in a factual accuracy test from December 2024 ‘the best models from OpenAI and Anthropic correctly answered less than half of the questions’ posed. Other concurrent studies also found AI outputs to be wrong at least half the time and with so-called ‘reasoning’ models things are even worse. That’s when they aren’t being used deliberately to spread conspiracy theories and encourage delusions.

I fully agree with John Noster when he says that generative AI is a ‘counterfeit’, but I want to push that observation beyond the everyday meaning of the word. Yes, AI is a fake, but more importantly, it is making (feit) in a way that is counter to - opposed to, inimical to, actively working against - the makings that people engage with together in order to flourish together. In being ‘fluent, convincing [but] fundamentally ungrounded and untethered to our humanity’, as Noster rightly exposes, AI productions extract value, attention, investment and care from the conditions in which people can decide what is true and what is worth believing. Generative AI is not making knowledge in an interesting new way, producing some unfortunate biases and incidental untruths that can be overcome by people with ‘AI literacies’. It is a mode of production that captures, devalues and finally exhausts the ways of making knowledge that belong to people and, in return, offers up only past representations, rehashed through forms of hyper-exploited and alienated data work.

‘Artificial intelligence’ itself is founded on a lie. It does not exist, or not as its key players define it. Sam Altman, for example, has said for years that the goal is a system ‘more capable than most humans across all areas of intelligence’. He announced a ‘breakthrough’ towards this goal in November 2023, confidently predicted human-surpassing AI ‘within a year’ in 2024, and in 2025 has postponed the rapture until 2029. He remains confident that ‘Superintelligent tools could massively accelerate scientific discovery and innovation well beyond what we are capable of doing on our own, and in turn massively increase abundance and prosperity’, although AI was supposed to be doing that back in 2022. What has now been renamed ‘artificial general intelligence’ or ‘strong’ AI is impossible in principle, according to many philosophers and computer scientists (Luciano Floridi, Hubert Dreyfus, Jaron Lanier, Ragnar Fjelland, François Chollet and Iris van Rooij among them). It is economically unrealisable, according to an entirely different group of experts (Richie Etwaru, Daron Acemoglu, Ed Zitron, and The Economist). It is unachievable with current techniques, according to most AI researchers, and recent polling has found that even if it were possible, available and affordable, most people wouldn’t want it. And yet it is in pursuit of it that we are asked to put up with everything AI can offer in the present.

Behind that one big lie come a hundred lesser lies like beads in a row. AI will make work more creative and rewarding. AI will make workers more productive - but somehow that won’t mean a choice between accelerating your work rate or losing your job. AI will help students learn (‘reduces cognitive load’). AI will stop students learning (‘reduces cognitive effort’) but don’t worry, we know the difference. Don’t use AI for this, because it’s cheating. Do use it for that exact same thing because it’s future proofing your skills. We can definitely tell when students are using AI. We aren’t using AI. We may be using AI but only when it is safe, responsible and ethical to do so. All our platforms and AI enterprise systems and agents and co-pilots are safe and ethical too and we absolutely 100% have audited that.

Big tech will be fine. It managed to push on through the MOOC lie, the metaverse lie, DNA testing scams and non-fungible tokens and crypto. Ok, the crypto lie is thriving under the Trump dynasty, but only as a manifest corruption scheme. Lying is business as usual for big tech. But education is supposed to have a rather different relationship with the truth.

Probability is part of the problem. The Bayesian probability that machine learning systems are based on does not, like the frequentist probability we were taught in school, express the probability of something being true. Rather it expresses the value of a particular prediction about the future, assuming that prediction were to pay off against alternative bets. Model training is ‘adversarial’ in exactly this sense, betting different states of the architecture against each other to find which produces the best, least ‘lossy’ outcome. In the world of financial speculation, where Bayesian methods have their most enthusiastic and indeed value-generating users, the bet you make does not matter so much as your power to make the market in which your bet turns out to have been the right one. Speculative financiers have bet big on generative AI, knowing that it does not and cannot produce any value in the usual sense of profit until knowledge production is substantially turned over to AI. But those investors are relying on the rest of us to make that market for them.

Education leaders who insist on the adoption of AI are actively producing a future in which the bet on AI turns out to have been the value call.

Of course education leaders may have reasons to prefer the AI future. Far more likely, though, they have become convinced that no other future is worth believing in or striving for. Like other situations where the truth is obvious but uncomfortable - and we don’t have to look very far in the contemporary milieu - there are personal costs to speaking up. But in some possible futures, the costs of not having spoken up may be high - the costs of complicity, for example. So what starts out as a wholly understandable reluctance to speak unpalatable facts becomes a commitment to producing the future in which that bet was the right one: the ‘facts on the ground’ have been transformed, the nay-sayers were mad or bad or have shut up and gone away, and we are living in the only future that was possible.

Education leaders who want to hedge their bets even a little at this point could start by telling the truth about the crises unfolding from the release of ChatGPT and other large language models. The targeting of students as users and the capture of educational researchers and policy makers has been relentless. No other group of people (with the possible exception of PR professionals) has adopted ChatGPT as thoroughly as students have or is using it so indiscriminately. We may not have answers but we could share truthfully with students that our practices of teaching, learning and assessment are being broken and that we need to find ways of continuing to learn together. We could stop pretending this is all OK.

The truth has a hard time in parts of academia, some of the parts where I feel most at home in fact. Universities - literal palaces of ‘one truth’ - have been complicit and remain complicit in projects of extraction and exploitation in the name of reason, not least in recent times through their partnerships with the AI industry and its military correlatives. But if this imperial certainty is to be overthrown, please can we hope for some more thoughtful kinds of uncertainty in its place? Some better accountability to history, some more equitable relations among knowledge cultures, some honesty in self-examination as well as rigour in methods. Can we not just put up a new tyrant to insist that truth is relative (to power) and nobody can believe the evidence of their own senses?

Because in a world of live-streamed genocide and hourly lies from the highest authorities, who does ‘post-truth’ really serve? We are not witnessing a scholarly debate about the plurality of interpretations here but a fascistic turn to revelation, authority and affect. While predictive AI is deeply implicated in the oppressive violence of Israel, China, Saudi Arabia and the US military, in state-sanctioned sectarian violence in India and in the violent deportations of Trump’s ICE, its generative branch is helpfully putting all the evidence of these crimes beyond belief. Experience is de-realised: everything smells fake. And when we append our advice to students with that small disclaimer ‘AI may be wrong’ we are teaching them that this doesn’t matter. Give up striving for truthfulness. There is no important connection between words and accountable actions in the world.

‘AI’ is a scheme of misdirection. The original Turing test and all of today’s AI benchmarks lay it down in black and white. How many people can you fool? That is literally the value proposition. And it is essentially the same question as: how many people can you enthral with your power?

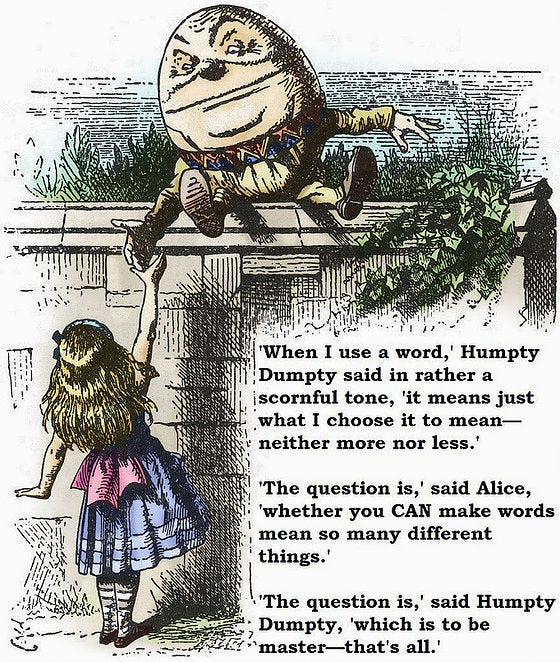

Data power, military power, political and media power, computational heft… as Alex Karp of Palantir has said (not quite quoting Humpty Dumpty), ‘AI is a weapon that allows you to win’. All power, no truth.

(Not) reasoning or explaining

Most scholarship does not aim at anything as ambitious as the truth but does aim to apply reliable methods and to explain the results. Methods are fallible but they are accountable, in the sense that other scholars can review their use and replicate or challenge their findings.

Generative models do not allow for such accountability. Their inferences are non-replicable. Their ‘black box’ architectures are constructed through trillions of discrete operations on billions of data tokens and tracing even a fraction of their hidden structure requires probing methods that are specialised, laborious, poorly understood and imprecise. On top of the models’ intrinsic obscurity there is deliberate secrecy over the data sources and the human data work involved pre- and post-training. To produce ‘chain of reasoning’ style interactions, for example, data workers are used to rate model ‘explanations’, to provide sample explanations for model refinement, and to build whole ‘explanation’ datasets for model training. User preferences are also gathered and re-used to make ‘reasoning’ sound reasonable. You thought it was just the maths talking?

Unfortunately the more models are tuned to ‘explain’ their ‘reasoning’, the more errors they introduce. So-called chain of reasoning (CoR) does not express how a model is actually ‘reasoning’ because ‘models are not reasoning’ (Apple 2025): instead, as the same Apple team demonstrated in a 2024 paper, ‘they replicate reasoning steps from their training data’. CoR reports are untrustworthy in principle: they are plausible explanations for plausible responses, and since the inferences involved are more complex, they burn more compute and carbon per query as well as introducing more mistakes. With complex problems, the supposed ‘reasoning’ can collapse suddenly and unpredictably, while with simple problems the ‘reasoning’ model can continue ‘its computational wheel-spinning’ long after a solution has been reached. (I wrote about this ‘halting problem’ in an earlier post on the Turing test.)

People need explanations. By explaining we develop rubrics, models, theories and schemata so we aren’t starting afresh every time we meet a new situation. In research, methodological clarity matters so we can invite others to query our reasoning. In teaching we want students to arrive at explanations for themselves, as part of building a mental world, and to test those explanations in different contexts. But we face an advancing doctrine that what these data models are doing, obscure as it is to human understanding, is a better route to knowledge – faster, more ‘objective’ and more scalable – than the old evidence-and-reason routine. Data models are vast enough to contain approximations to every new case. Data does not need to generalise. As Chris Anderson notoriously argued in Wired (2008):

Petabytes allow us to say: "Correlation is enough." We can stop looking for [explanatory] models. We can analyze the data without hypotheses about what it might show. We can throw the numbers into the biggest computing clusters the world has ever seen and let statistical algorithms find patterns where science cannot.

The ‘we’ in this sentence are not learners reaching for new understanding, or research communities trying to build useful shared knowledge, but people with ‘the biggest computing clusters’ and ‘petabytes’ of data, whose answers must now be accorded authority despite (or perhaps exactly because of) their resistance to human-readable justification. Along with post-truth, data models usher in an era of post-science, post-explanation or more broadly of post-theory in which whole cultures of human reasoning are less valued than data architectures, whose inferences people can subscribe to but never share in, still less question or debate.

Among the rebutters of Anderson’s thesis, philosopher Byung-Chul Han points out that when we give up on some correlations being more meaningful than others, we give up on reasons and reasoning altogether. Absolute information (if it were possible) would be the same as absolute ignorance:

Navigating one's way through Big Data is impossible… Dataism arises from the renunciation of meaning and connections; data is supposed to fill the void of meaning. Han (2013)

I respect Han’s philosophy but I prefer a different argument. Data models are not unreasonable, to my mind, but they are new regimes for coordinating the work of reason. Data and datasets are the products of reasoned collation, curation and labelling. The data workers involved in model refinement are making sense – interpreting, evaluating, comparing, deciding, often completely rewriting outputs. AI engineers have human sense-making in mind as they guide models towards ‘desired’ states - those that seem (to their users) reasonable. And finally, those unpaid users must endlessly check, correct and compensate for the ways that models still fall short of good sense in their contexts of use. It is in the interests of the AI industry to obscure this reasoning work behind the mask of data and algorithm, but in truth LLMs are only useable as a regime of reasoning because they involve human reasoning at multiple points.

But these disparate reasoners don’t constitute a community in the way that a university department does, or a local history group or a fan fiction site. They are separated from knowledge of their own knowledge, which becomes knowledge only within the regime of the data architecture. They are separated from each other, working on different terms, unable to reach a consensus or agree on a shared project or even to know what anyone else is doing. The low entry bar for using AI allows the industry to claim it is more ‘accessible’ than a university course or a difficult book, but beyond this low bar is a rigid division of labour and a structurally-enforced lack of visibility and mobility. At least the research community has to justify its openings and closures, its codes, procedures and rules.

Just as with ‘the truth’ we don’t have to believe that regimes of knowledge production in education are open and fair to believe they can be made more so, and that they work better (reasons are better justified, knowledge resources are better used) if they are as open, fair and diverse as possible. The open education community has promoted these values for many years, one reason why in a recent keynote I insisted that ‘AI is the enemy of open’ in education (another thought - full recording coming soon).

The AI stack is not a community. It is not amenable to dialogue, critique, consensus-building or action to challenge injustice. Its data architectures are impenetrable by nature, obscure by design, unaccountable by the ruthless prerogative of commercial power.

Technology, as Peter Thiel has opined, is ‘an incredible alternative to politics’ as a mode of power. It has made some people so powerful they can put themselves beyond reason and explanation entirely.

(Not) developing expertise

Even if they take the most transactional, neoliberal view – that the point of education is to develop economic capacity via people with expertise – education leaders should examine what the AI project means for that transaction. The goal is for expertise of the kind currently accredited by the education system to be represented, organised and operationalised through data architectures. While the jury is still out on how far this is possible, given the poor showing of these models in real-world contexts of expertise, workplaces are already restructuring workflows as data flows so that AI agents and APIs can be made to ‘work’. This redesign is not incidental. Following Stafford Beer’s ineluctably materialist logic, the purpose of a system is what it does - and as I have argued from the start, the purpose of the AI system is to redesign work, especially what is called expert or ‘knowledge’ work (though, in my view, all work is also that).

Anyone who followed ‘artificial intelligence’ through the era of ‘expert systems’ will be reasonably familiar with its failures, and may even know some of the reasons advanced for them. Most famously Hubert Dreyfus, philosopher and AI critic, observed that representable knowledge is only one element of expert practice (that is, what experts actually think and do when their expertise is being put to work to produce social value). Other elements include embodied routines, situational know-how, ‘perceptual expertise’ or the way that senses become re-calibrated (experts notice different things), remembered experiences, the values and norms of the expert culture, and the ‘feel’ or flow that comes from integrating all of these fluently. Dreyfus was also interested in purpose - why certain things are worth doing and thinking about, and what animates sustained projects such as learning to become an expert. Representable or conceptual knowledge is still a crucial element of expertise - but even managing representations and concepts involves know-how that can itself become more expert and specialised.

Now there is evidence, summarised here by Punya Mishra, that the use of generative AI by experts can actually make them less productive; astonishingly, this is even true of that most happy user, the professional coder. Interruption to the flow of practice is a drag. Also, did I mention, generative AI is crap and monitoring the crapness is a further drag on time, attention and productivity.

Generative AI does not serve professionals well. It can be used to offload some of the standardised, repetitive tasks that would once have gone to junior colleagues. But who exactly does this serve? Not the established professionals who are expected to be more productive while ‘minding the AI’ for errors and failures; not the younger workers whose roles are squeezed out by ‘AI’ (or, more accurately, by data workers in the AI labour stack) and who miss out on valuable learning as a consequence; and not the professions whose knowledge is being siphoned into data structures that they must lease it back from. Not even the workplaces that have paid for the AI and must now pay to make their own data ‘AI ready’ and AI-secure, so having less to invest in people with talent and expertise.

The whole point of cognitive automation is to turn expensive, specialist expertise into data work, some of it to be done by experts themselves (fewer of them, working harder) and the rest by more generic workers who can be casualised. In fact as generative models fail to improve by the magic of mathematical emergence, the volume of data work being done behind the scenes is actually increasing. It is also getting more specialised, meaning that expert work is becoming data work. AI labs are ‘hiring thousands of programmers and doctors and lawyers to actually handwrite answers to questions for the purpose of being able to train their AI’, according to Marc Andreessen, who should know. The industry’s continued reliance on human experts to pull the strings of the ‘smarter than human’ system is why Meta recently bought a 49% stake in Scale AI, a datawork outsourcing company notorious for its exploitative labour practices.

Whether captured by outsourcing companies or held in the databases of business users, expert knowledge is being captured, to be put to work again by other experts - because it is only when that knowledge is put to work in human contexts and for human purposes that it acquires actual value. But more of that value is now falling towards the companies that own the knowledge-extraction-and-extrusion machine.

For the whole bait-and-switch to work, expertise must be made more representable at the one end (that is data work) and more exploitable at the other end (that is the demands for greater productivity through datafied processes).

The neoliberal university ought to understand all this, since the economy is its raison d’être. It should be able to explain why the wider economy shows so little AI impact on earnings or efficiency, so little AI-enhanced growth and productivity beyond that most capital-intensive sector, the AI industry itself. It should have predicted that AI adoption would be hype-driven and shallow rather than deep and structural, and that the majority of workplace AI projects would end in failure while every thinktank repeats the mantra that ‘work must become AI, so AI can work’. The neoliberal university may not have many philosophers left to explain the epistemic fallacies but it is swimming in business analysts who could advise about the economic risks. The general risks to the world economy of AI’s over-financialisation and under-delivery of value, its diversion of capital away from other economic priorities such as energy transition in favour of computational infrastructure, and its ponzi-scheme investment structure that relies on the rest of the economy conforming to AI and its demands before anyone makes any money. And the particular risk that – if it succeeds – the value of people with expertise and therefore of universities will be pushed to the floor.

Given the colonisation of expert knowledge by computation, it’s hard to see why societies and/or young people in them would continue to pay for qualifications that might or might not add value to work in the expertise-data system. Major companies already prefer to gather their own evidence that applicants are ‘thinking for themselves’ and piece-rate workers qualify themselves by showing they can fulfil sample tasks before they are assigned to paid ones. Neither requires a university qualification. If data work is the best most graduates can hope for – and many students and recent graduates are doing it already - they might as well learn-to-earn within the data engine.

Some elite universities will no doubt continue to thrive in this scenario, promising innovation (from research outcomes, machine learning PhDs) and ‘top experts’ whose know-how will be propagated through the databases and architectures associated with their respective fields. People with expertise will continue to be the source of economic value and the drivers of innovation. It is mass expertise that is under threat, the aspiration of the majority of the world to become specialised and qualified, to become fluent in particular knowledgeable ways, and to secure better life prospects from that.

Look out for more links in this series! And check back soon for a relaunch of my podcasts with more ways to listen.

Thank you. A brilliant re-iteration of the most dangerous nonsense sweeping every corner of our lives. Just one recent example is the government delivering related "education" and training into the hands of Google.

Google's strap-line has somehow transmogrified into "Be evil".

And the number plate on all official Labour government cars is BPG 1SG.

Blackrock Palantir Google 1% Support Group

The slogan, "learn smarter, not harder," used on the AI-generated image at the top of your essay, reminds me of the primary marketing slogan for Google's NotebookLM until May of this year ("Think Smarter, Not Harder"). That slogan encapsulates the entire generative AI hype: these programs openly tell us that we no longer have to work hard, we just have to be "smart." And this word, "smart," is already part of the digital vocabulary of consumerism and transactional relationships with technology. We're surrounded by all sorts of smart devices that automate nearly all aspects of our lives. What's the big deal if we automate learning, i.e., eliminate it, and let NotebookLM, Claude, and ChatGPT summarize the research and write literature reviews? What's so alarming is that the AI industry continues to automate experiences and dehumanize us in the process, a trajectory that began some time ago and has become normalized.