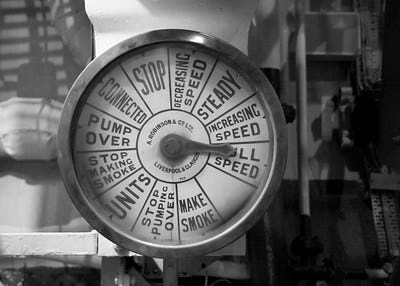

Never mind the quality, feel the speed

How AI is reshaping research and the research environment

This piece was originally part of my earlier post on ‘risks to knowledge economies’ but it seemed to be asking for its own space.

tl;dr: in this piece I look at the use of large language models in the research process generally, before turning to specific applications of data modelling in natural science, social science and the humanities. I include a review of a recent (excellent) meta-synthesis of research on AI in Education as an example of my general argument, which is that the research economy is already skewed towards speed, and generative AI is dialling that up to the max, to the detriment of all kinds of qualitative, interpretive, innovative, inter-disciplinary and marginalised fields of knowledge.

Hours of reading in minutes!

Unlike students, serious researchers are not expected to use public, open language models to fill gaps in their knowledge. In fact, scientists writing in Nature (my go-to source for generative good sense) have recommended only very limited use of such models, perhaps for polishing a writing project. But this advice is widely ignored. Other articles in Nature, from 2022 and 2023, find that over-reliance on generative models threatens a ‘crisis of reproducibility’ in scientific research. The vastness of the data sets, the unknowable and changeable training parameters of the models, the stochastic (partially random) nature of their outputs, and above all the ‘uninformed’ way models are being used by researchers all add up to a big problem for scientific results: they can’t be reproduced. And this is not a minor glitch. It removes a cornerstone of empirical method.

Increasingly often, researchers find their articles misrepresented, articles misattributed to them, and fake citations being reproduced faster than anyone can produce any ‘real’ research. The ChatGPT Impact Project is keeping track of some of these effects. The term ‘synthetic’ seems particularly apt here, as large language models contaminate entire information environments like plastic particles in the world’s oceans.

Of course research production was a shitshow before ChatGPT. Reviewing ten years of rising profits and falling quality control, a recent article on the LSE Impact blog described how the ‘academic publishing industry based on volume poses serious hazards to the assessment and usefulness of research publications’ (and, presumably, of research itself). Data-based metrics (citations, h-indexes, REF scores, league tables etc etc) powerfully shape research agendas by determining what gets funded, whose careers are advanced, and which research centres thrive. This economy holds back research in the global south and in peripheral fields of study. It concentrates resources in already-well-established researchers and research centres, who then have even more power to shape the research agenda. Rather than new knowledge, it favours the production of paid-for content (journal subscriptions) and proprietary metadata (indexes), to the benefit mainly of giant content mills like Scopus and Web of Science.

‘Generative AI’, injected into this profitable cycle, ramps up every one of its volume-maximising, profit-seeking effects. In ‘Speeding up to keep up: exploring the use of AI in the research process’ (2022), Jennifer Chubb and colleagues found that machine learning:

boosts the speed and efficiency required in a (contested) market-driven university. Yet AI also presents concerns… how AI could miss nuance and surprise […] and how infrastructures developed for AI in research could be used for surveillance and algorithmic management.

Since Chubb expressed these concerns, researchers have been beset by new ‘AI’ apps, promising everything from instant literature reviews to help with grant applications.

GPT-4 can now be integrated with established corpora such as PubMed and Wolfram’s knowledge base, and academic publishers are queueing to sign partnership agreements with the likes of Apple and OpenAI. Other apps provide a natural language front end for research applications. The Advanced Data Analysis feature of ChatGPT, for example, lets you work with data or code using natural language prompts. This can be risky if you don’t know what outcomes to expect, and if you aren’t experienced enough to spot and troubleshoot the errors. Just as coders are beginning to ask how many years it will take to clean up after ChatGPT/Copilot, and whether they really want their jobs to become ‘debugging bad auto-code’, researchers may regret coming to rely on these hit-and-miss interpreters. But there is no doubt that they are fast.

Early last year, the blog AI Snake Oil noticed that language models were becoming a key part of the research workflow. Its authors warned that, as well as ramping up productivity, the models introduce new vulnerabilities:

Researchers and developers rely on LLMs as a foundation layer, which is then fine-tuned for specific applications or answering research questions. OpenAI isn't responsibly maintaining this infrastructure by providing versioned models. [For example] researchers had less than a week to shift to using another model before OpenAI deprecated Codex.

Just as I have been arguing from a teaching and learning perspective, experts in research infrastructure suggest that the sector should work towards open, collaborative approaches to model development, specifically to support the needs of scholarship and research: ‘otherwise this space will be left to publishers and the biggest, most highly financed research institutes’.

Sound familiar?

Qualitative research is particularly threatened by the demands of speed, productivity and scale. This fits in well with the withdrawal of funding and the political undermining of the humanities and critical social sciences. But, as this brief overview suggests, the quality of all research may be at stake.

Research in AIEd as an example

Let’s take AI itself as an example. In 2018, before the current hype cycle had really taken hold, respected researchers in the AI community analysed 400 papers from top AI conferences. They did not find one that had documented its methods in enough detail to allow verification by other researchers. Now, you could see this as a feature of cutting-edge technical research, especially in competitive, commercial environments. This is of course exactly the same argument used by AI companies against regulation. Nobody else is qualified to check their homework.

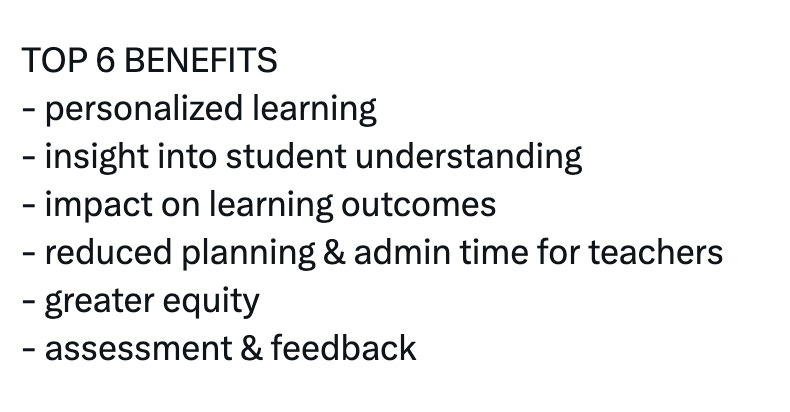

But this argument carries less weight in the field of AI in education, which at least has to account for educational effects in ways that are credible to other educators. A recent (Jan 2024) meta-synthesis review of ‘AI in Education’ found a ‘worrying lack of studies’ that were ‘rigorous’, ‘ethical’, or ‘collaborative’ (collaboration might help resolve some of the methodological issues, for example by providing internal checks or interdisciplinary approaches). The authors did not include any studies of generative AI because they considered the research field too immature. You may remember my own informal survey of the field back in June 2023 that failed to find any rigorous studies from practice. But in the few weeks since this excellent report was published, I’ve seen it cited on social media as evidence of… the benefits of generative AI in education!

Here is a typical example (no link, to save embarrassing anyone).

I think this also has something to do with the problems of speed and scale. The synthesis review looked at no fewer than 66 earlier reviews, covering hundreds of component studies of ‘AI in education’: a term that included use cases as diverse as intelligent tutoring systems and support with basic course admin, because the massive hype and citation-boosting potential of the term ‘AI’ means that all kinds of research now have to fit into this space. And while the authors’ judgements were clearly reached through careful evaluation, their mainly negative findings are framed by the quantitative methods they needed to deal with the scale of the data. These methods provide their own positive spin, because when you count things, you can only add to the pile of things you are counting.

What the list of ‘top 6 benefits’ actually describes is the benefits identified in the review articles. The words ‘mention’ and ‘focus on’ are very widely used in the findings section, indicating that the reviews did not have to find quality evidence in order to be counted. In fact, of the 17 reviews that ‘included primary studies that evaluated AI’s impact on learning’, only 1 fully and 3 ‘partly’ applied any quality checks to the research they included. The authors found that, among these, ‘studies that provided explicit details about the actual impact of AI on student learning were rather rare’ (they point to just five).

Thanks to these authors generously making their data available, I was able to cross-reference ten ‘quality’ reviews with claims of educational benefit. I also dug down into the studies they found that claimed specific benefits to learners. (I’m not comparing my own small dig with the dedicated work of the report’s authors here, but I have recorded my own non-peer-reviewed assessments and am happy to share them on request.) I found three underlying studies that were well designed and showed modest benefits from the use of learning analytics (LA) data by teaching staff, and five that showed gains in the specialist teaching of surgery. A lack of robust control groups was a major issue in other studies that claimed to find benefits (shout out to all those ‘intelligent tutoring systems’ researchers who report over 19 pages how they developed their own system, and on page 20 how they tried it out on their students).

A 2022 review of ‘AI in education’ (Bearman et al. 2022) reached similar conclusions to the 2024 meta-synthesis, using a much smaller corpus and the qualitative method of discourse analysis. Bearman et al. identified two key themes in the discourse of AI in education: ‘imperative response’ and ‘altering agency’. We can see both these themes operating in the production of research around AI in education. Researchers have to get in quick to catch the wave, and to do that, they have to hand some agency over to quantitative technologies and techniques. And numbers tell their own story. Simpler than concerns about ethics. More direct than nuanced and necessarily circumspect judgements about the quality of other people’s research.

In the end, I came to feel that the synthetic process itself worked rather like a large language model. Hundreds of papers, hundreds of thousands of words, many of them the same words, all designed to achieve maximum credibility for the technologies and techniques they described. After the iterative training runs of literature review, meta-review, and meta-synthesis, we are left with an edifice of coding and counting that is built on evidentiary sand. And for all the great work these authors have done in pointing to the sand, and remarking on its lack of stability and suitability for building on, the edifice still dominates the view.

Research metrics are subject to the same network effects as other data at scale. So the researchers who first claim a field, a new term, or an idea are the ones who get ahead in the citation/search engine game. Once ahead, their citations and re-citations are amplified, regardless of the quality of the work. We end up with a research economy in which predicting and then driving the ‘next big thing’ is all that matters. And this is exactly what transformer models are good for. Their statistical methods were honed in the insurance industry and in market analysis (the link is to an interview with mathematician Justin Joque), where they are used to manage risk and to predict future areas of profit. These logics of prediction and profitability are a perfect fit with a research process already driven by metrics and first-mover reward.

Modelling as method

It is important, I think, not to confuse these generic large language/media models with the specialist data models that are core methods in many fields of research. Some of these are very regularly put out as evidence of AI’s ‘better than human’ performance, as though an off-the-peg language model has just been plugged in and produced the goods. Predicting protein structures is one (Alphabet’s proprietary AlphaFold models rule the roost here). Modelling climate change is another. Medical diagnostics is a third. The models in question are built from specialist data sets, produced over years of research and data gathering. They may use a unique combination of machine learning processes, or unique data representations, suited to the specific problems they address. In the case of protein molecules, for example, the underlying structures are represented as spatial graphs. In medical diagnostics, the problem space is inherently a probabilistic one.

And even in these paradigm areas, data modelling is not a magic bullet. Although generative vision models promise to speed up the diagnostic process, a recent review in Nature found that ‘accuracy on diagnostic tasks progresses slower on research cohorts that are closer to real-life settings… many developments of models bring improvements smaller than the evaluation errors’. In protein folding, the Wikipedia entry on AlphaFold notes many limitations before it can become the ‘backbone’ of new drug discoveries, while another recent review in Nature found that: ‘while AlphaFold predictions are often astonishingly accurate, many parts do not agree with experimental data from corresponding crystal structures’, emphasising the importance of experimental results. In climate science, modelling has become a highly profitable industry, undermining attempts at international cooperation (for example through various ‘data for good’ initiatives), and is currently very much in demand by banking and insurance.

But multiple methods are always used in natural science to tackle tough challenges such as climate change or building vaccines. Scientific models are always embedded into complex workflows, involving a range of methods, instruments, and expertise. And the outcomes are constantly checked against (real world) experimental results. In ‘Demystifying beliefs about natural science in information systems’, Siponen and Klaavuniemi (2021) consider how observation, note-making, the formation of hypotheses and interpretive conversations are all overlooked in the rush to data. This discourages attention to how data models are themselves constituted through human methods of data gathering and intentional design, and in contexts that are as loaded with ‘externalities’ as any other research setting. For example, new relations of power are emerging that concentrate research resources around the models themselves, and can shape future research around considerations of how to generate further value from them.

If debates about the value of modelling are only warming up in the natural sciences, in social scientific research they have been raging for years. The application of data and statistics to social phenomena is as old as statistics itself. For an eye-opening account of the connection between statistics, psychology and ‘race science’ (i.e. eugenics), I recommend this long read by Aubrey Clayton on Nautilus. It matters to us, because the statisticians Karl Pearson and Ronald Fisher were especially concerned to develop ‘objective’ tools for psychology and its applications in education. The famous ‘p-value’ was the result.

Modelling now builds on a wide range of statistical techniques, not all of them in the same state of crisis as the p-value. But all of them abstract from the world of social relations. They substitute data (however this has been derived) for engagement in the field of study, for context and ‘being alongside’, for participative knowledge building, and for developing new and diverse interpretations. All these, of course, are labour-intensive. But modelling as a method doesn’t only benefit from its speed. It benefits from a deep cultural bias towards the veracity, the objectivity, the sheer science-iness of statistical findings, even when their epistemological and political problems are well known. This bias we inherit directly from the work of the early statisticians, who were at pains to present their racial and other discriminatory categorisations as the stuff of pure mathematics: as ideologically untainted.

And finally, to the humanities (my own subject home). Musicians, writers and artists have used algorithms from the start of computation, to expand their thinking and the possibilities for expression.

Computational methods have been used in digital humanities research for decades to uncover hidden patterns and alternative viewpoints on cultural materials. But the expertise, I think, lies in knowing when the patterns and viewpoints are meaningful ones, when they lead in productive directions, and when they lead to dead ends or dark corners. Creative practice and interpretive research both demand some fine control over the parameters of a query, closing in on what is ‘interesting’ in slow time and with (human) reflection.

Foundation models do not provide these tools to the average user. They outsource the work of parameterisation to engineers, of sense-making and judgement to ‘humans in the data engine’, and of creative composition to the near-instantaneous, probabilistic function – though perhaps it is on a ‘creative’ setting. They do not reveal what is outlying, exceptional or new, but converge instead on what is normative and well known.

Who knows why?

Nature recently interviewed Michael Eisen, editor-in-chief of eLife, on possible futures for research:

Eisen pictures a future in which findings are published in an interactive, “paper on demand” format rather than as a static, one-size-fits-all product. In this model, users could use a generative AI tool to ask queries about the experiments, data and analyses, which would allow them to drill into the aspects of a study that are most relevant to them. It would also allow users to access a description of the results that is tailored to their needs

The principle of open data publishing is a vital one for transparency in science. But here I think it is running up against a different principle – that data should be understood as the result of investigative work, often practical and empirical, always contextualised by specific problems and theoretical frameworks and cultural biases, as well as by practical constraints such as funding and competition. In Eisen’s world, all data is the same kind of data, just datafied stuff, potentially all available through the same model(s). What looks like openness (data belongs to everyone!) is in fact a refusal to recognise the work or acknowledge the purposes of human researchers.

As Chris Anderson wrote in a celebrated essay for Wired, ‘The End of Theory’, the deluge of data does not just threaten qualitative and creative ways of knowing the world. It also threatens scientific method, in which data collection and analysis are driven by theories, questions and conceptual frameworks. Anderson foresaw, with both excitement and anxiety, that the triumph of data meant:

Out with every theory of human behavior, from linguistics to sociology. Forget taxonomy, ontology, and psychology. Who knows why people do what they do? The point is they do it, and we can track and measure it with unprecedented fidelity.

Or as the philosopher Antoinette Rouvroy puts it: ‘it’s no longer about [revealing] what is, but about governing uncertainty’.

Funding follows narratives, and here we have a set of narratives about objectivity, accuracy, managing risk and ‘removing human bias’. Although people steeped in statistical theory (Justin Joque, for example) have shown these narratives to be flawed, it is easy to understand why research leans towards them. The falling cost of computation, compared with the cost of training human researchers and of engaging with the messy and increasingly complex real world, is one crude but extremely effective driver. The need to manage risk in an increasingly unstable, crisis-ridden world is another. The Bayesian statistical methods that underlie generative transformer models have been used most intensively in market analysis, where:

‘even the most miniscule of increases in accuracy directly supplies a competitive advantage. In this way, the Bayesian revolution provides a key set of methods for informational capital, allowing the computation of knowledge from data…’

And conferring huge advantages on whoever gets there first.

Sometimes speed counts: in conflict, in emergency responses, in high-volume trading. Search heuristics are what allow an algorithm to close in on a solution rapidly. It’s not always the best solution, and by valuing only heuristic approaches, we produce only good-enough solutions to problems that are already well established. Worse, as these models converge on statistical norms, they undermine our capacity to respond to unexpected change. They shed outliers, creative possibilities, marginal voices, and leftfield solutions. Solutions to problems in the real world are often not distributed around a norm, but actively in conflict, or at least reflecting different and not-easily-reconciled interests and cultural viewpoints.

If we want to limit the impact of data modelling in research, I think we will have to stop valuing speed and heuristics and re-embrace the complexity of what matters. The benefits of extended practice and slow reflection. The value of deliberation, which is now recognised to produce the fairest, most liveable solutions to complex human problems. Deliberation takes time, and skilled facilitation, and emotional resources, and it often reaches conclusions very different from the ‘mean average’ of what the deliberators thought before they sat down together. Also, we need the ethical grounding that qualitative research provides (to all researchers). Of being with and alongside in order to know, of non-exploitative, non-extractive knowledge work. Acknowledging positions, rather than what Donna Haraway called the ‘god trick’ of imagining a perfect view.

Statistical models can’t be allowed to stand in for every method of coming to know, inside academia and out. Especially vulnerable are the kinds of knowledge valued in cultures other than the technical engineering disciplines that now dominate the disciplinary hierarchy and the innovation economy. Literature, music, making images. The interpretive humanities, critical social science. And beyond universities, the oral, narrative, practical, embodied, sensory, spiritual forms of knowledge that make up the rich cultures in which academic knowledge must negotiate a place and prove its worth.
