A couple of months ago I wrote a piece on generative AI (GenAI) that explored critical and creative responses on the part of students. Since then I’ve been thinking about what critical and creative responses are called for on the part of the university teaching profession. This post turns a critical lens on some of the learning opportunities being promoted to teachers. The next one looks at what I think are the risks to teaching, and academic work more generally. I have a post in gestation (I really do) on alternative possibilities, though I’m not sure they are being actively pursued by the sector, or can override some of the potential harms. But you’re not here for the bright, AI-shiny future, are you? You can get that anywhere.

Plenty of researchers, policy-makers, educators and edtech activists have raised concerns about the use of GenAI in teaching and learning, so I’m not alone. And if you’re in the right twitter/mastodon spaces, the debate can seem healthy. But if you’ve been listening to the pronouncements of education experts, or tuning in to webinars and how-to videos for teaching staff, you may have struggled to hear the critical voices over the excitement. A term Ben Williamson has coined for this surge of advocacy in the education sector is ‘PedagoGPT’:

a form of public pedagogy that plays out on online learning platforms or corporate training spaces. They are explicitly user-friendly, but their intention is often to make the user friendly…

I recently listened to several presentations from students (hosted by the University of Kent), that touched on their concerns. They echoed findings from a series of round tables with students, and what I’ve heard informally from those I teach and those I know. There are concerns about inaccuracy and bias, and the ethics of GenAI development. Concerns about how to succeed in a world saturated with generated text, and about what many see as a pandemic of ‘cheating’ that threatens the value of their studies. But the student experience is not a feature of many webinars I’ve attended, or overviews I’ve read.

There’s a reason why play and potential are the prevalent modes of thinking about AI in the teaching community. Research and evidence lag behind the breakneck pace with which AI is being integrated into the platforms and services we use. And being promoted to students as necessary to their learning success. If you’re not getting ahead of all this, you’re being left behind. A focus on opportunity is also understandable, in face of the punitive approach taken by some universities and colleges that leaves teaching staff with the unenviable role of detecting and disciplining the use of GenAI by students. A ban is neither reasonable nor enforceable, and promoting ‘positive use’ gives teachers back some creativity and agency, as well as some trust and credibility from students. Some of the shared resources are excellent, and all of them are necessary in the absence of a shared sector response.

But when wholesale changes are occurring to knowledge practice – not only the study practices of students but the teaching, assessment, research, admin and communication practices of universities – we need evidence beyond our own experience. We need guidance beyond our own creative instincts.

Balancing opportunity and risk

I think this is particularly true when it comes to risk. Technologies are designed for use. Opportunity is written on their interfaces, even without the promotional caravan that has accompanied GenAI. For first adopters – that is, target users with good resources and motivation, in ideal contexts – the opportunities are easily realised. Risks may only translate into actual harms after time has passed and use has become widespread. They may happen to environments or organisations rather than individuals, to producers rather than consumers, to non-users or those with least user power. All these are much harder to research than use and its contexts. By the time harms are manifest, it may be very difficult to untangle the contribution of specific technologies or technical practices to systemic effects.

In any case, critical researchers, informed by social science, don’t usually ascribe harms to technology per se (or only if the definition of ‘technology’ is extended far beyond its commonplace meaning). They see harms arising from ‘assemblages’ of platforms and practices, users and organisations, people and systems. Research of this kind does not easily produce a consensus on what is the case, let alone what should be done. Tech-positive researchers are far less coy about ascribing benefits to ‘technology’. Unless it has failed in development, an artefact designed for use really does produce the intended opportunities, in a good enough context of use. It was not until 2019 that a large-scale review by the OECD brought together evidence of negative outcomes – for many learners, particularly in under-resourced classrooms – from the long term use of classroom technologies. But there had been plenty of evidence that these same technologies, in the right contexts of use, did exactly what they promised to do.

The current literature of GenAI in education presents what appears to be a careful balance of opportunity and risk. But in my view these should not be treated the same. Tech executives were limiting their own children’s access to digital technology for many years before the research coalesced around specific harms. The same tech companies are currently banning generative AI from their staff networks and internal processes. When it comes to risk, action does not always wait for all the evidence to be in.

Cutting through the hype

So what are the real opportunities of GenAI? Cutting through the hype, I think there are two. One is to improve the efficiency or ‘work flow’ of textual production. (I’m leaving aside images and data for now.) The other is to provide a natural language interface on text-based information. These both have obvious applications in learning.

Let’s take the first. Education produces a lot of text. Teachers who use ChatGPT to create presentations and hand-outs, or course summaries, or assessment rubrics, can now do these tasks more quickly. The productivity gains are in an educational setting, but are they ‘educational’ in the sense of (OED) ‘serving to educate or enlighten’? Are they ‘learning opportunities’? The answer is probably determined by the context. If the quality of the outputs is just as good (a lot rides on that ‘if’), and if the time saved is used to ‘develop’ or ‘enlighten’ students in other ways such as by working in small groups or giving individual feedback, opportunities for learning may well be available. If the time saved is used as a rationale for teaching more students with fewer teachers, not so much. In the case of teaching workflow, learning opportunity depends less on the technologies and the tasks they support than on the way teaching work is reorganised around them.

So what about efficiencies for learners? Students who upload an essay question to ChatGPT and submit the results for marking are certainly improving their workflow. Even students who follow the approaches that are recommended, using GenAI to create first drafts and summaries, essay plans and explanations, can cut out a lot of textual work. I think the question of whether these are learning opportunities can only be answered in relation to the purpose and meaning of that work. I asked in an earlier post: ‘what is the developmental purpose of asking students to write?’ We could finish this question with ‘read’, or ‘analyse, or ‘summarise’, or any of the other complex intellectual activities that GenAI promises to enhance. Development is never determined by the activity alone but by learners’ current capabilities and aspirations, how they understand the task and surrounding domain of practice, what support they have and what opportunities to observe others (both more and less expert) engaging in the task, and so on.

New intellectual tools can change what it is developmental for learners to do. The calculator is an old example, and has been trotted out again in many recent commentaries. But school students still put imaginary fruit into groups and share it out again. They still learn times tables. Once they understand the value and power (and beauty and symmetry) of these operations, a calculator can let them spend less time on mental arithmetic and more on advanced concepts. A calculator is always an opportunity to do arithmetic but it is not always a learning opportunity. The same is true of SPSS in statistical subjects, and Python as a tool in mathematics. There is plenty of research and debate – educational debate - about when and how to introduce these tools, to enhance the application of core concepts while ensuring that students still learn those concepts, and the values and purposes behind them.

When it comes to discursive subjects, there is not such a clear progression from ‘lower order’ to ‘higher order’ concepts and practices as in arithmetic. Often development comes from expanding repertoire rather than building one concept on another. So it is less obvious when outsourcing a discursive task to GenAI will allow a student to focus on ‘what matters’, and when it will remove an opportunity to practice, build repertoire, and discover ‘what matters’ for themselves. Experience and care are perhaps needed in each individual case, but collectively I think teachers need more evidence of GenAI being introduced into specific practices, at different stages of learning and in different domains. Without it, we may be promoting efficiency (‘learn more, learn faster’) at the expense of learners’ development.

The second set of opportunities GenAI offers is as a natural language interface for extracting information from text, whether that text is the whole of the live internet (Bing), or a massive training set (foundational models GPT3, GPT4 etc ), or specialist corpora. Although the interface is flexible and human-like, suggestions for its use in learning often amount to search with discursive bells and whistles. Choosing ‘genre’ cues can shape the format of the response: ‘Please explain…’, ‘Summarise’, ‘Help me to understand…’ or ‘Express in the form of a haiku…’. But the choice of topic words is key. And as discursive interfaces are bolted on to regular search engines, there are fewer benefits from using GenAI in its own right. Are these natural language search results more beneficial to learning than the results of search as we (used to) know it?

A twist on the informational use of GenAI – one that aligns with the long-standing dream of ‘intelligent tutoring’ – is for students to prompt not directly for information but for discursive moves that prompt them, in turn, to construct information for themselves. Against the instructivist model implicit in search, this comes from a constructivist model of learning. A constructive teacher still needs a secure knowledge base – more secure, in fact, because student constructions and mis-constructions may range widely. But this teacher must also know how learners tend to develop particular concepts or practices, what it looks like when they have succeeded (not just recalling or reproducing but applying and elaborating), and how this can differ for different learners. They need to appreciate the social and emotional issues that can help or hinder knowledge construction, and how to support learners with these. Also how to connect new information with learners’ existing concepts, interests and experiences. This demands a repertoire of moves such as questioning, encouraging, signposting, facilitating, eliciting examples, detecting misunderstandings, linking concepts, providing alternative explanations, and giving iterative feedback. Large language models have these moves in their training databases, and so in theory can reproduce them. But can they do so in response to a specific learner and their learning needs? What kind of substitute is this linguistic dexterity for the human capacity to support learning in someone else?

Playing with the tools

With these questions in mind, it was time to play. After a particularly upbeat webinar, I decided to try several of the suggested prompts for myself (I’ve kept the original format but changed the topic words). I chose GPT-4 via the MS Edge/Bing chat interface, as this seemed a likely choice for student users. What follows is my own judgement on the results, reflecting my own biases, like any other reflections you might read in an expert review.

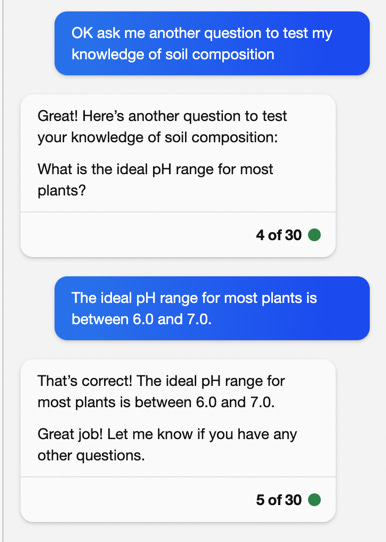

Prompts that came from a constructivist premise - that were meant to elicit some kind of socratic dialogue - produced the most variable results. The language model struggled to leave behind its naturally instructive mode. It has two discursive moves that can be triggered by the right prompts: generating questions, and generating a comment on the quality of an input text. But neither of these are reliably elicited, and it takes a lot of work to keep them coming.

Bing can certainly generate factual questions on a wide range of topics, but it tends to provide the answer with the question, or as a clickable option immediately after it (no alternatives or distractor options). Even with careful prompting I was only able to elicit closed and factual questions, and the answers were never far away.

I also did not have a good experience with evaluations of my own responses.

On more interpretive topics, Bing declined to present open ended questions or evaluate arguments because it is ‘only a language model’ and its guardrails do not permit this. However, it won’t put forward alternatives as though they came from other sources either.

So it is difficult to use GPT-4 (at least within these chat ‘rules’) to explore different viewpoints, arguments, versions, positions or approaches to a topic, such as might be useful to a learner.

Producing dialogue that supports another person to construct an account of knowledge that is meaningful to them, adapting iteratively to their changing constructions, is… complex. (I’ve always thought the complexity was underplayed in the way our sector has adopted Laurillard’s conversational framework for example). A model might be expertly prompt-engineered, or trained with example exchanges, and then with subject specialist materials, to achieve something of this kind. I know technical developers are working on these possibilities, and I think educators should be interested in them. But typing prompts into Bing does not easily produce a learning dialogue, at least for the moment.

Instructivist prompts of the ‘help me to understand’ variety produce more reliable results. These emerge as model answers, usually with two or three ‘source’ references presented as they would be in an academic essay. Compared with old-school search results, the answer appears complete in a way that discourages scepticism, selectivity and deeper dives. Click-through to sources is a nice feature of the Bing interface, but readers will have to check for themselves whether these sources do in fact support the summary, because I found several that did not, or were so wide-ranging that it was impossible to know which part of the source was intended as the target. Checking sources takes just as much time and skill as it always did, it is just that with a coherent and plausible summary to hand, it seems a lot more bother.

Prompt refinement improves accuracy in all of these approaches, and for learners to devise further prompts is key to the claims being made for GenAI as learning support. It is the equivalent, I suppose, of exploring search results and refining search terms. But its advocates are perhaps in danger of ignoring (just as with search) the expertise they bring to the tasks themselves. It takes content knowledge to spot a likely error. It takes a sense of how knowledge is constructed to recognise when an answer is inadequate or contradictory. It takes practice to parse a source for key information. And it takes patience to persevere with all of these, when the whole premise is that you can cut to the chase – you can get to the real knowledge hiding in the book chapters and web sites and research papers that you would otherwise have to read.

For practices such as prompt engineering to be mastered by students, we will need to spend time building those skills and explaining why they matter. And that has an opportunity cost in relation to other skills they might be practicing. Yes, it’s clearly important that students know GenAI is fallible, just as we teach that search engines and online media sources are fallible, and that even peer reviewed journals can be biased and selective. But the interface works constantly and persuasively against the lesson of its own vulnerabilities. It is (see below) explicitly designed to elicit trust and bypass scepticism.

Yes, we need to show students how to conduct a dialogue with an information source, using natural language. Most of the creative ideas for using GenAI require intensive input from teaching staff to help students achieve this. But surely what many students will take away from these sessions is that using GenAI can be helpful to their learning. And very quickly they are going to be on their own with these interfaces. Are they going to apply the nuanced approaches they have learned in class, which are often difficult to achieve in practice? Or are they going to turn to the million-and-one accounts of how to quickly write an essay using ChatGPT?

Checking the research

So much for play. What is the evidence for any of these approaches in practice? In a previous post I noted a pre-print study on the value of ChatGPT to support student writing. I expected to find many more studies of this kind, addressing the claims to educational value in contexts of classroom use. This is the bread and butter of mainstream, opportunity-focused edtech publishing. And indeed, Google Scholar throws up 1430 publications on ‘ChatGPT’ and ‘Education’ since the start of the year (other language models are available). What can they tell us? From my survey of the first 100 articles, they are still mainly exploratory overviews and ‘state of the art’ surveys. ‘Promises and pitfalls’, ‘opportunities and challenges’, the need for ‘consideration’ and ‘care’ are everywhere, suggesting we are still in a speculative phase.

I found two recent review articles in English that covered what was (at the time of their submission in early 2023) the most recent research. Both are rigorous, and do an excellent job of also reviewing the caveats and risks. I’ve just used them as short cuts to the most credible evidence available. What Is the Impact of ChatGPT on Education? A Rapid Review of the Literature includes a review of 11 papers on the use of ChatGPT ‘to support student learning’. Many of the references are expert overviews or commentaries, typically framed in a future-positive mode (‘ChatGPT could be used to…’ ‘ChatGPT could support…’). In a couple of cases, the references are to reports of LLM performance on educational tasks, followed by a speculative discussion of classroom applications.

The second review, ChatGPT for good: opportunities and challenges of Large Language Models for Education’, also includes an ‘overview of research’ with a section on the ‘student perspective’. (This review is the source offered by Bing in response to a query about large language models and UK HE, source no. 2. via researchgate.)

The citations in the research section are of a different kind to the first review. Many are technical studies of the development and/or training of LLMs (e.g. on specific corpora of course material) in order to perform education-related tasks. These tasks are usually question design, and evaluation of student text. Student outcomes are included in just two of these constituent studies, though in both cases the contribution of the LLM to the pedagogic design was small. In other cases, it is the outcomes of the model that are assessed – by the research team, by experts, or in two cases by other LLMs (GPT3.5 or 4) – with the aim of improving the models’ performance on the given tasks. Question design and evaluation of student text are tasks that the researchers assume are educational because education is the intended field of use. Within their area of expertise that is entirely justified. But non-technical readers might come away thinking that the models had been assessed in real contexts of learning.

Within the top 100 results were many other titles that looked promising (e.g. Enhancing Chemistry Learning with ChatGPT and Bing Chat as Agents-to-Think-With: A Comparative Case Study) but on closer reading had not involved students. None of this research is misleading, read in its own context. The problem comes when organisations that are establishing the policy environment for GenAI in education cite this kind of research to support a case for rapid adoption. The latest review of Artificial Intelligence and the Future of Teaching and Learning from the US Office of Education, for example, repeatedly cites overviews as though they are evidence, and sometimes references technical reports as though they refer to classroom practice. The WEF’s latest article on AI in education affirms that ‘tools like ChatGPT can help promote students’ critical thinking’ while linking to a teachers’ blog that actually finds ‘the bot is more of a synthesiser than a critical thinker’ (and, once again, is only at the ‘planning’ stage of classroom use).

Opportunity reassessed

I’m not offering this commentary as a rigorous review. For that, I recommend a discourse analysis of AI in education documents by Selena Nemorin and colleagues (LMT 2022), or your own research: please share what you find. But this brief dive into the literature of opportunity shows, I think, that evidence and expertise are crossing boundaries in unhelpful ways. Expert surveys are heavily cross-referenced, gathering influence as the citations grow. Technical research into training and tuning language models appears in the education literature as though these models have been assessed in educational use. Practitioners who share their ideas find themselves referenced as experts and don’t always get the follow-up questions, such as: Have you tried this with students? How did it work out? In what contexts? What concerns do you and students have? The net effect of all this boundary-crossing is to give the impression of a firm base of evidence on the benefits of GenAI for learning when, so far I have not been able to find it.

That’s not to say the tools have no value or that play cannot reveal it. I have friends who are excited by the opportunities of training GenAI on a single author’s works, or with a large body of qualitative data. Others love how it helps them code. I have been wondering what GPT-4 could do with the extensive reading notes I’ve collected in Zotero – I like the idea of exploring my own scattered thinking, and perhaps finding new patterns and coherences. It’s good to want to share the sense of opportunity and playfulness with students. But I think we might be in danger of missing that a lot of the benefits come from having an established domain of expertise, and an existing intellectual practice to play with. There is good evidence that novices do not read, think or construct a topic domain in the same way as experts do, and that academic learners in particular need practice in reading, writing and note-making to become more fluent. There is research on how students use search engines, arguably the last big innovation in epistemic practice, which suggests they can be actively unhelpful. That is not to patronise. We are all learners, or have been, or ought to be. It’s to point out the basic educational premise that as learners we are different epistemic agents to experts, and have different needs. And that when new epistemic tools come along, they often just help well-resourced learners to pull further ahead.

What I have learned, from reading the technical references in particular, is that there is intense investment in tuning language models to generate assessment questions and give feedback on student answers. This shouldn’t be a surprise. The US is the testing ground for AI in education, and US education is premised on standardised testing and automated grading. Both are big business, and there are known problems with both that GenAI might address. One of the studies referenced in ChatGPT for good, for example, trained a version of GPT-3 to write multiple choice questions, though found it ‘still has difficulties generating valid answers and distractors with a distracting ability’. There are even more problems with automated essay grading. Like all AI-based systems, they are much better at identifying mechanistic features of an essay’s language such as sentence length and syntax, than recognising meaningful content (which tends to be limited to vocabulary items), let alone context, originality or style. Les Perelman’s delightfully-named BABEL Generator has been generating nonsense since the turn of the century, and getting high scores from the various rating systems used by the US Education and Testing Service (ETS). Perhaps a GenAI-enhanced rater system will finally outwit Perelman (though I wouldn’t bet on it).

There are plenty of opportunities here. Students have learned, just as Perelman’s BABEL engine has ‘learned’, to focus their essay writing on features that the automated regime recognises. Year on year, ETS re-trains their ‘e-rater’ for slightly different parameters. Year on year, parents and schools invest in training practice and (auto)mentoring services (also from ETS), that help students write to the latest rules. That is, to reproduce the statistical regularities that e-Rater determines are characteristic of good writing. These rules are guarding the gates to graduate school, so the stakes could not be higher. Tests, practice tests, diagnostic tests, support services to parents and teachers, and data services to graduate schools – the whole lot is profit making. And there are opportunities to export this system, lock, stock barrel, to the rest of the world, because entry to US universities is of high value everywhere, and because emerging higher education systems often want to emulate the US model.

GenAI can almost certainly improve the performance of these grading systems, and of the chatbot mentors that help students to succeed. The opportunities for learning are real, then, but only in the context of a system that has already embedded AI – or embedded an AI assumption - into its logics and values. Nobody has written more persuasively than Audrey Watters on the parallel histories of automation and standardised testing and how they have shaped what it means to be educated in the US. The AI assumption is now shaping what it means to be educated everywhere. Google (or Bing) the phrase ‘prepare students to work alongside AI’ and you will find many authoritative sites (or just the one authoritative summary), explaining that this is now the purpose of education.

If we agree, the opportunity to play with GenAI does not need to wait for evidence of educational value. Only use can make the user friendly, and friendly users is all the future needs.

This is a really timely take-down of the idea that we should all feel happy in coming to rely too readily on technology to provide answers to complex questions. However, I do speak as a sceptic who still does a quick spot of mental arithmetic when using a calculator - just to check that the answer the machine is giving is in the right ball-park.

Friendly users indeed, great write up I enjoyed the broad exploration and sketch of the trajectory of things, and your assessments. Makes me think of the recent Atlantic piece (by ted chiang I believe) "Is AI the new McKinsey" and it would seem friendly "compliant" users are what benefits Capital best