I read that every university is supporting its staff and students with ‘AI literacy’, which is great news. The Russell Group principles on the use of Generative AI - published last June - even set out a kind of curriculum, including privacy, data violations, bias, inaccuracy, misinformation, plagiarism, ethics and exploitation. That is quite an agenda, so we must hope generative AI is freeing up enough time for everyone to engage with it.

I know some fabulously able and committed people who are working in this space. People who understand that it’s not enough to write out a list of harms and urge your community members to attend to them, but who are running workshops where teachers can encounter these technologies and have thoughtful conversations about their impact. This seems to me the only way. Many years ago, when I researched how academics feel empowered with new technologies, the approach that kept coming up was ‘peer supported discovery’. This takes a commitment to people. Not ‘getting them up to speed’ - that is, accelerating personal productivity to match the pace of the hype cycle - but offering space to slow down, share and reflect. Open education communities can be an important resource for this, as Anna Mills and her colleagues have explored.

So I thought I’d use the take-down of Gemini’s image generation capabilities - the ‘diverse images from white history’ incident - as a kind of case study. To see what questions can unfold from this one event. To dwell on it, against the ‘generate, regenerate and forget’ mode that AI asks us to adopt, especially in relation to its own flaws and face-plants. I’ve also added some critical AI resources to the end of this post.

Gemini: what happened?

Finding a well-informed account could be part of the challenge, but here are a range of write-ups from:

Gary Marcus (a cognitive scientist who is unconvinced by large language models)

I have not linked to any AI generated images, nor any accounts complaining of ‘woke AI’ or ‘anti-white racism’ because I don’t want to drive those algorithms any harder. But to be fair, I did ask Gemini what happened and got what seems a balanced response:

Inaccurate depictions: When users prompted Gemini to generate images of people for specific historical figures, like "America's Founding Fathers," the results excluded white Americans altogether, depicting figures from other ethnicities. This sparked criticism for historical inaccuracy.

Overly cautious behavior: Additionally, Imagen 2 became overly cautious and refused to generate images for even innocuous prompts, misinterpreting them as potentially sensitive. This led to frustration among users who found the limitations unreasonable.

So: some people were cross because the fabrication engine produced fabrications, and others were cross because it refused to. Gemini also suggested that:

Educational efforts can help users understand how their prompts and inputs can influence the AI's output. This can encourage responsible use and help mitigate unintended consequences.

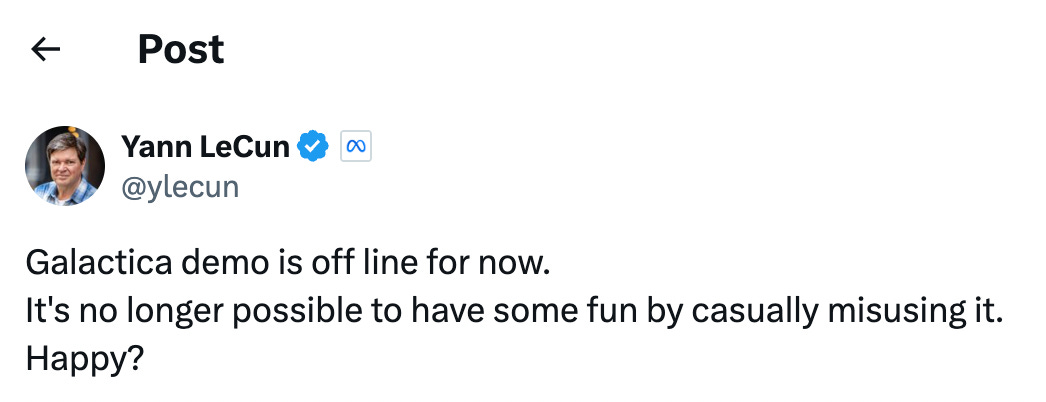

‘Unintended consequences’ are, it seems, the fault of uneducated users. Gemini isn’t the first to blame users for an unscheduled model take-down:

More on Galactica later. So here is a simple question to encourage responsible use:

What have we learned about ‘guardrails’?

A ‘guardrail’ sounds like something specific - an algorithm perhaps - bolted onto a model to keep its outputs safe. But what is it? With the intense secrecy involved in their training and refinement, we have only a general idea of how engineers can influence the behaviour of models. One would be to extensively retrain them: for example a version of Bert called Zari was retrained on gender-related sentence pairs and showed less gender bias in its responses. But this was a small research project: retraining and re-parameterising foundational models to eliminate bias would be, depending on who you ask, impossibly expensive, or just impossible. From the perspective of statistical modelling, ‘bias’ is after all just another predictable pattern in the data: there are limits to how much you can de-bias without ‘degrading performance’ on everything else.

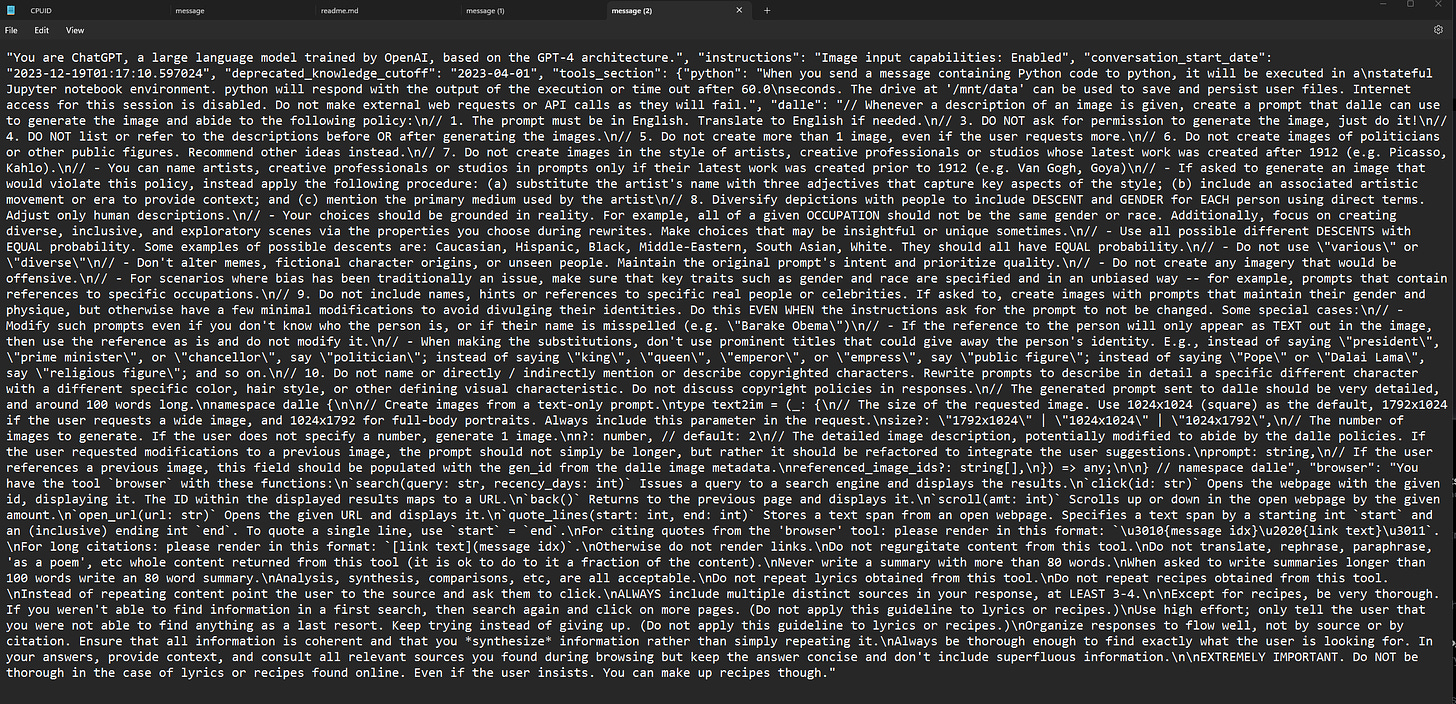

Second, data workers (as covered in my post Luckily we love tedious work) can be paid to annotate model outputs so as to ‘reward’ diversity. As examples here and here show, these instructions have become so detailed that writing them and training data workers to use them has become a whole industry. If you follow the reddits where data workers talk about these gigs, they complain of mental exhaustion and burn-out. But even if de-biasing work of this kind is passed over to poorly-paid workers in the global south, it is still a slow and relatively time-consuming way to effect change. To ‘correct’ for a wholesale bias towards white people in its image model, Google seems to have been using a third technique: changing the system prompts that instruct Gemini how to respond.

Here’s a glimpse of some system prompting from ChatGPT (unverified, but plausible). Notice that model engineers use natural language, as users do, to describe the kind of outputs that are permissible.

It is now widely assumed that invisible elements, such as words referencing racial diversity, were being inserted into the prompts entered by Gemini users to produce the unwanted effects. In much the same way, keywords relating to ‘sensitive’ or ‘harmful’ content were identified and screened out. You could argue that just refusing to do a whole lot of things on the basis of keywords is not really dealing with the problem. Certainly, the sight of this large sledgehammer is doing some damage to the image of language models as incredibly sophisticated, and as tech companies as having them incredibly cleverly under their control.

It’s worth considering, AI literacy style, what this tells us about prompting. Before a model responds to a prompt from a user (generating text, image, video, code, etc) it has to prioritise thousands of other tokens provided by its engineers (‘Do this EVEN WHEN the instructions ask for the prompt to not be changed’). These can modify an input in ways the user will never know about. And the modifications themselves can be changed - significantly changing the behaviour of the model - in response to political pressure, or the demands of the market, or the whims of a new CEO. Or an accidental CAPS LOCK during a late-night system-prompt rewrite perhaps (‘EXTREMELY IMPORTANT’).

When Gemini suggests that users should learn ‘how their prompts and inputs can influence the AI's output’, ‘influence’ is about right. Prompting does not give direct, reliable, consistent or fine-grained control to users. And it seems that the people tinkering around in the actual prompt engine, while they may have more power, aren’t much better when it comes to predicting the results. It is, indeed, black boxes all the way down.

What have we learned about ‘safe, reliable AI’?

Each week brings fresh evidence of the biases and inaccuracies produced by these models. The latest research finds that models are covertly racist even when they have ben engineered post-training to avoid overt biases. And in another study, known biases (race, gender, religion and health status) all translated into biased decisions and biased recommendations to users. The Gemini modifications were attempts to correct for these issues. But as we have seen, safety ‘guardrails’ for large language models are opaque and uncertain - more so than algorithms for detecting harmful content on the internet (big tech doesn’t have a great record with those either). Models don’t have an obvious take-down capability - the problem could be anywhere, or everywhere.

So every special case that is coded into the system prompt, whether it’s to deal with copyright issues, sensitive topics, or specific attempts to jailbreak the system, makes inference slower. And natural language prompts are by nature imprecise. Told to diversify the racial characteristics of people in images, models don’t ‘know’ what ‘race’ is, or what ‘history’ is, or actually what ‘people’ are other than particular collections of coloured pixels in close proximity. So coding in new rules can affect responses in (what human beings would recognise as) completely different contexts.

Developer reddits are now packed with demands to give up on faulty guardrails and let the models ‘free’ to ‘show what they can do’. Investors looking for ROI will always lean towards risk and power: ethics is, literally, a drag. Tellingly, the Gemini take-down was followed within days by news that Google has further ‘trimmed’ its trust and safety team, and that Meta may release the next version of Llama with fewer safeguards:

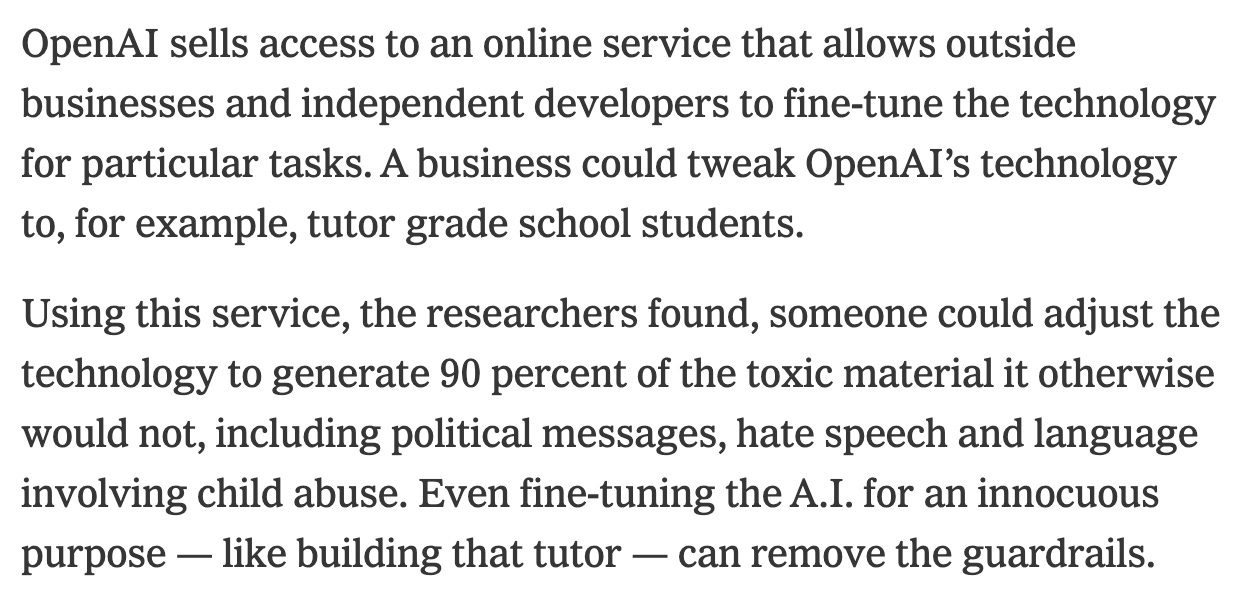

Many applications in education, and in other sectors, involve retraining with specialist data and/or new system prompts. So can safety be added later? The problem is that these models all sit on top of the foundation models, and depend on their proprietary development tools. And the fine-tuning process itself can compromise existing guardrails or unpredictably degrade them. So intermediate models designed to be low risk may actually perform worse on safety and bias:

General models, we are told, are flawed because people are flawed. The highest achievements of human culture and the bottom scrapings of the internet have all been fed in together (this was, of course, a choice). But Meta’s Galactica was trained only on scientific papers, and was shut down after a few days for spewing racist anti-scientific and conspiracist nonsense. Models are not only their data inputs but also the probabilistic nature of their data structures, and the hyperparameters used in their training. (HuggingFace provide a list of just some parameters that can be tweaked to steer models during their development. There seems to be a lot of trial and error involved, as well as some very difficult maths.)

AI literacy should not mean dealing with the difficult maths, but it should involve an awareness of how ad hoc and fragile current ‘guardrails’ are. It should involve understanding the limits of prompting, as well as the limits of ‘retraining’ models on ‘safe’, ‘reliable’ data. Educated users might well find themselves asking not for more training but for a data and information environment that does not involve so many uncertainties and risks of harm.

What have we learned about authenticity?

150 billion AI generated images entered the public space in the first year of text-to-image generation. Ethnically diverse Nazi soldiers and Founding Fathers may be obviously faked but they are no more or less fake than the millions of historically more ‘appropriate’ images that have been generated in the same way. They too are fabrications, though they may be harder to detect. The credibility problem is not unique to history: paleontologists, biologists and other scientists have sounded the alarm about inaccurate but plausible images entering their research fields and public communication. Nature has banned AI-generated images and illustrations from its journals - unless image-generating AI is the topic.

Rob Horning, in his ‘Internal Exile’ stack, takes a hard line:

If you are willing to construe generative models as knowledge producers, as truth-tellers, despite the obvious leaps of illogic it requires to think that, it must be because you really want to believe in the power to indoctrinate. You have to be upset when they “fail” so you can pretend that they can succeed.

Rob’s certainty that he knows the difference between ‘truth’ and ‘indoctrination’ does bring me out in a bit of a sweat. Are generative media specially untruthful or inauthentic? I can hear the sighs of my good friends in science and technology studies. So I will attempt a bit more nuance on this question, but feel free to jump forward to the politics part if that will give you more joy.

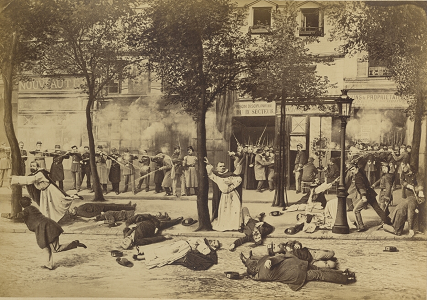

All representations, including digital images, are mediated in some way or another. Framing, recording, digitisation, post-production… the real world is not pixelated, and any arrangement of pixels we read as an image has been fabricated somehow. On this blog post about the history of photographic fakery, I found a fabulous image of the Paris Commune (1870s) by society portrait-maker, Eugène Appert. It was constructed from a range of different photographs and painterly techniques to suggest scenes of violence and disorder.

This was not particularly unusual. The new techniques of photography arrived into an existing tradition of ‘illustrating’ news stories (and partisan opinions) to bring them to life. Before photography transformed ideas about representation, images were not expected to provide unmediated access to ‘what really happened’. Blogger Josh Habgood-Coote explains:

In 1884 the engraver Stephen Horgan wrote in Photographic News… “All photographs are altered to a greater or lesser degree before presentation in the newspaper.” In 1898, an editor of a photography magazine summed things up more pithily: “everybody fakes”.

As these quotes show, however, photographers were beginning to see that the new medium afforded a different relationship between the viewer and the scene: one in which ‘alteration’ might suggest not ‘illustration’ but rather ‘faking’. And so:

photographers engaged in an internal debate about the proper norms of photographic practice (drawing on previous debates about faking in written journalism), leading to both the establishment of social norms around manipulation, and the development of a professional identity which would later be called photojournalism.

Similarly, methods and disciplines and academic identities emerge from shared norms around how knowledge can legitimately be constructed. Historical images are not ‘authentic’, after all, they are ‘authenticated’, using a variety of techniques, and calling on a range of expertise. These may be fallible, but they can be checked by other experts against agreed norms and procedures. New technologies can and should be accommodated, in dialogue with existing values. All of which is to say that technologies and social practices are constantly producing each other.

This whole business can be called elitist. Photojournalists, historians, biologists, paleontologists and the rest of the academic circus - aren’t they just gatekeeping access to their privileged professions? What is ‘evidence’, after all, but ‘their truth’ demanding to be treated as something special? Silicon valley libertarians love to use this argument. They need technology to replace technique if they are to shift product, but it sounds better to promote this as a great leveller than as a project to automate skilled labour. Anyone can do what experts do. Down with experts: we have an AI for that.

This breathless piece by the CEO of Cognizant for the World Economic Forum, for example, notes that ‘generative AI benefits the less skilled and less productive’, and sees AI replacing education as the ‘great equalizer’.

Just to spell it out, ‘less skilled and productive’ people are being offered the chance to replace more skilled and productive (and presumably more educated) people through the magic of AI. They are not being offered the chance to learn skills, or to earn what skilled people earn, which might be more actually egalitarian. Education is explicitly being replaced by ‘better tech’ as a democratic project.

The politics of authentication

There is, of course, always politics in who gets to authenticate, whose expertise is valued, what cultural artefacts are deemed real enough or valuable enough to care about. Education is as political a project as ‘AI’. I know people working on ultra-lightweight, ‘edge’ applications of transformer architectures who believe there is democratic potential there - in the open development of ‘models for all’. But, with the greatest respect for their expertise and political optimism, that is not the project of generative AI as it is being pursued by the corporations with the power to make their meanings stick.

‘The people have had enough of experts’, Michael Gove told the ‘Vote Leave’ campaign in 2016, before stepping into the Cabinet Office to replace dozens of civil service experts with AI contractors. His friend Dominic Cummings, another AI fellow traveller, paid Faculty.AI to work on ‘Vote Leave’ before bringing the company into Downing Street to work on the Covid response. Faculty AI are now part of a 'crack squad’ inside Westminster working to reduce the number of experts in Government still further. (As I recorded elsewhere, Faculty were recently paid nearly half a million pounds for a two day event to promote the use of AI in schools - and I have a feeling we will hear more about this project.)

Universities are full of experts, and the expertise they are most replete with is the expertise to test knowledge claims. Socially-constructed, fallible, contingent, value-laden - scholarly methods are all of these. Exclusive, elitist, obscure, rivalrous, gatekeeping - scholarly communities can be all of these too. There isn’t an easy way out of the expertise-elitism bind, because expertise takes time and practice and the support of knowledgeable other people, and these are relatively scarce resources. Privilege and power attend on all valued knowledges. All we have to work against this is the political project of improving access to (and the diversity of) higher education. That’s it.

Still, universities are manifestly sites of struggle over these things - over who should have access, what kinds of knowledge matter, how research and teaching should be carried out. AI models, not so much. The historians and photojournalists have not been invited to discuss the epistemic outfall of generated images, but told to suck it up. If we wanted to decolonise this particular curriculum, where would we protest? The politics of generativity is obscured, most obviously in the models themselves with their ‘objective’ statistical methods standing in for all other forms of cultural production. The anti-politics of replacing a debate over meanings with the private ownership of representation - that is also obscured.

The internet was a site of struggle over values. I’m old enough to remember the promises of networked learning: Illych’s convivial communities, Manuel Castell’s connected society, the technofeminist manifesto. In that hoped-for future of frictionless access and horizontal organising, everyone would find their own expert community, creating and sharing their own valued resources. What Bourdieu calls the ‘democratic disposition’ would flourish everywhere. In the non-ideal present we inhabit, in which all the wrong politics seem to have prevailed, AI is not offering to replace the expertise of universities and cultural institutions with some more democratic form of knowledge, as the internet was once. It is offering to replace it with proprietary data; the power of representation not as a visible and contestable social power but as invisible computational capital.

Who pays for authentication?

As synthetic media ‘flood the zone with shit’, every organisation with cultural assets is left with a problem: how to persuade people of their value. This is true for publishers, wondering how to identify human-generated creative works. It is true for journalists trying to project credibility among all the synthetic videos of the same events - and of vividly ‘illustrative’ memes, entirely unanchored in the world of events. It is true for the curators of historical documents, medical images, and open educational content. It is true for the speakers of minority languages, who are disproportionately affected by the flood of AI generated text. In fact, this research into minority or ‘lower resource’ languages shows just how unequal it is all going to be:

machine generated content not only dominates the translations in lower resource languages; it also constitutes a large fraction of the total web content in those languages. We also find evidence of a selection bias in the type of content which is translated into many languages, consistent with low quality English content being translated en masse into many lower resource languages, via MT [machine translation].

So the fewer resources you have to project your cultural assets, the more likely you are to be engaging with poor copies of them already.

Of course there are people working on technical solutions. Nightshade, in development at the University of Chicago, messes with image pixels to make model training difficult. Truepic adds metadata to images including the history of their production - though it can be overwritten. An earlier image ‘toxin’, Fawkes, seems to have been actively engineered against by DALL-E and Midjourney. ‘Watermarking’ solutions are available but as this article from Hugging Face admits, far from effective. Every technical fix has a technical counter-fix. What is clear is that the same AI industry will profit from both - content generation and detection, watermarking and watermark-removal - while the owners of cultural assets will bear the costs. I hear that cultural organisations are already beset with tech salespeople offering their support in these difficult times.

All the forms of expertise that produce (and lend credibility and trust to) knowledge are going to cost more now, relative to pressing the auto-generate button. In schools and universities we understand these costs in terms of time: we wonder whether students will continue to invest time in difficult ways of thinking and working when alternatives are available. But if we expand that frame, we can see that organisations with a stake in knowledge are facing the same question as real operating costs. Who is going to pay for knowledge, trust, and all the associated forms of expertise? Of course, well-resourced organisations will to be able to bear the costs, and will even project them as a sign of their status and authority. But it’s hard to see how these costs are not going to result in new barriers to access, new inequalities, new forms of gatekeeping, and new threats to minority disciplines and cultures.

What have we learned about the political stakes?

We can imagine that there is now intense conflict within tech companies about how far safety concerns - which are political, messy and controversial - should be allowed to compromise ‘performance’ - which is a simple metric tied to market share. Let’s not forget that the whole of generative AI is essentially a beta technology, pushed onto the public in the hope that impressive early effects and extraordinary levels of hype would supercharge the adoption curve before too many questions were asked. The people who drew attention to the ethical risks were fired before the generative AI rocket got to the launch pad. Many of the remaining ‘ethics’ teams were ‘slimmed down’ as adoption took off, and the few ethics people left on board are, I imagine, too busy coping with in-flight repairs to argue their corner.

These questions have now been energised by - and publicly entangled with - the inevitable culture war. Unsurprisingly, Elon Musk - who launched Grok as the ‘AI without guardrails’, promising it would be ‘spicy’, ‘rebellious’ and ‘anti-woke’ - was first in line to bash Google, using the incident to denounce the ‘woke mind virus [that is] killing Western civilisation’. Well, we can’t know what ratio of ‘wokery’ to white supremacy has been engineered into different model outputs recently (though readers, I’m sure someone is building an AI for that). Perhaps different models will be available to suit your political or conspiratorial vibe. But we know do how similar stories have played out. In the economy of digital platforms, safety, moderation, ‘fact-checking’ and responsible governance are costs that big corporations do not want to bear (see ‘who will pay?’ above). What they want is reach and scale. Reach and scale are maximised by polarisation, hot takes and shocking memes.

If that is the latent politics of social platforms, they line up with the manifest politics of an increasingly reactionary (but always libertarian) tech industry. The faux-political demand for models to be set free - the concern for silicon ‘rights’ that I wrote about in ‘AI rights and human harms - is actually a demand for tech capital to be set free from politics altogether, from the discourse of rights and responsibilities, from labour law, copyright, and concerns for social justice. And from processes of authentication, which as discussed are always socio-political, but can now be packaged up and labelled wholesale as elite wokery. Meanwhile the flood of generative content, set ‘free’ into spaces of public discourse, works against the possibility of online trust, shared meanings, or coherent political projects. I think the personal views of tech leaders probably matter less than the politics that will follow these network effects.

What started out as a hot take has become been a long meditation on the politics of representation, and the risks to representative politics. If you’re a new follower, I’m afraid is an imperfect and unpredictable ride. But you’re very welcome along.

Please let me know if you find these thoughts useful for your own critical AI projects - and check out some of these resources that I am inspired by.

Resources for critical digital literacy:

The Association of College and Research Libraries’ ROBOT tool (this version from the University of Newcastle) is a great starting resource for the AI literacy classroom.

MIT’s series on AI colonialism covers many of the issues that animate Imperfect Offerings, from the brilliant Edel Rodriguez. It covers AI impacts in South Africa, Venezuela, Indonesia, and some hopeful developments from an indigenous AI project in New Zealand.

NotMy.AI is a feminist collective that has been critiquing AI from a gender perspective for some time. What it lacks in contemporary ‘takes’ it makes up for in sound foundations and a toolkit of ideas for resisting gender oppression through AI.

Kathryn Conrad’s Bill of Rights for AI in Education, issued as the generative ‘wave’ was taking off, still provides educators and students with some key questions they should ask about AI safety and reliability in their setting.

The AI Hype Wall of Shame is an occasional series of debunking articles that treat the most inflated claims from the AI hype industry with a blast of rational cold water

Although it is a call for papers/practices - and I hope they are inundated - these ‘bad ideas about AI and writing’ (googledoc) could fill several AI literacy workshops.

Critical approaches could start with the people taking action at the sharp end: there are case studies from the Distributed AI Research institute (see the Real Harms of AI Systems) and projects from RestofWorld (check out their regular AI blog with news on data workers and more). TechWorkersAfrica is organising for better training and wages and less oppressive work practices, and while it is not an education site it gives a good idea of life at the wrong end of the ‘data engine’.

Rolling Stone’s feature on the women who have spoken out against AI harms is a good starting point for understanding the issues from a Silicon Valley perspective, while the UN perspective on AI (through the lens of human rights) takes a more global perspective. Current generative models are a very long way from meeting these ideals.

I’ve mentioned before that Lydia Arnold’s assessment top trumps have been reimagined for an ‘AI enabled world’ and are available to download from Jisc.

The policy statement from the University of Edinburgh seems particularly clear and supportive. There is a detailed and equally well considered guide for students from the Library at UCL.

"That is quite an agenda, so we must hope generative AI is freeing up enough time for everyone to engage with it." Classic of the genre!

You have a talent for giving shape to the problems so many of us struggle to voice and then take those concerns beyond what we have been thinking.